SYLLABUS

GS-3: Awareness in the fields of IT, Space, Computers, robotics, Nano-technology, bio-technology and issues relating to intellectual property rights.

Context: Recently, the Union Minister for Electronics and IT said that the future of Artificial Intelligence (AI) will be shaped by smaller, more efficient models rather than extremely large systems.

More on the News

• The Union Minister shared India’s AI roadmap during a private media interview on the sidelines of the World Economic Forum in Davos, Switzerland.

• He stated that nearly 95 per cent of global AI work is currently handled by small models.

• He said India is developing 12 AI models ranging from 50 billion to 120 billion parameters to meet enterprise needs.

• He noted that construction is underway at 10 semiconductor plants, with three already in pilot production.

• He added that four more plants are expected to enter pilot and commercial production this year.

• He highlighted emerging innovations such as sound-to-sound AI and expressed confidence in India’s role in global innovation.

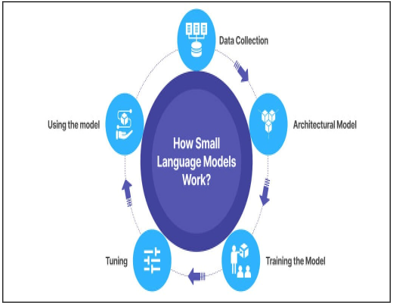

About Small Language Models (SLMs)

• Small Language Models are AI systems that process and generate natural language using fewer parameters than large models.

• Their parameter counts range from a few million to a few billion, whereas large language models can have hundreds of billions.

• Parameters are internal variables (weights) learned during training; they shape how a model understands and responds to language.
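Because a model's size is simply the number of its learned parameters, a rough figure can be estimated from its architecture. The sketch below uses a common back-of-the-envelope approximation for decoder-only transformers (about 12 × layers × d_model² for the transformer blocks, plus the token-embedding table), ignoring biases and layer-norm weights. The function name and the configuration values are hypothetical and do not describe any specific model.

```python
def estimate_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a decoder-only transformer (hypothetical helper).

    Assumes the feed-forward hidden size is 4 * d_model, so each block holds
    about 4*d^2 (attention Q, K, V and output projections) + 8*d^2 (feed-forward)
    = 12*d^2 weights. Biases and layer-norm parameters are ignored.
    """
    per_block = 12 * d_model ** 2
    embeddings = vocab_size * d_model  # one learned vector per vocabulary token
    return n_layers * per_block + embeddings


# Hypothetical small-model configuration (illustrative only)
small = estimate_params(n_layers=24, d_model=2048, vocab_size=32_000)
print(f"{small:,} parameters (~{small / 1e9:.2f} billion)")
# prints "1,273,495,552 parameters (~1.27 billion)"
```

Because the block term grows with d_model², doubling the model width roughly quadruples the per-block parameter count, which is one reason model sizes climb so quickly.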

Core Architecture and Functionality

• Transformer framework: SLMs use transformer neural networks, whose self-attention mechanism weighs how strongly each word relates to the others, allowing the model to capture context.

• Tokenisation and embeddings: Input text is split into tokens, which are converted into numerical vectors (embeddings); the model processes these vectors to predict the most probable next token.

• Specialised training: SLMs are trained on smaller, curated, domain-specific datasets, unlike LLMs, which rely on vast general-purpose datasets.

- Large Language Models (LLMs) such as LLaMA, Claude and Gemini are advanced AI systems trained on massive datasets to understand and generate human-like language.
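The pipeline described above (tokens, then embeddings, then self-attention, then next-token prediction) can be illustrated with a toy sketch in plain Python. It rests on simplifying assumptions: a word-level vocabulary stands in for real subword tokenisers, embeddings are random rather than learned, attention uses the embeddings directly as queries, keys and values (no learned projections), and the embedding table is reused to score candidate next tokens. Because nothing is trained, the predicted word is arbitrary; the point is the flow of data, not the answer.

```python
import math
import random


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(vectors):
    """Scaled dot-product self-attention with a causal mask (simplified).

    Queries, keys and values are the input vectors themselves; each position
    may attend only to itself and earlier positions.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for i, q in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: no peeking at later tokens
        weights = softmax([dot(q, k) / math.sqrt(d) for k in visible])
        mixed = [sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# 1. Tokenisation: map each word to an integer ID (toy word-level vocabulary)
vocab = ["<unk>", "india", "is", "building", "small", "language", "models"]
token_to_id = {tok: i for i, tok in enumerate(vocab)}
text = "India is building small language"
ids = [token_to_id.get(w, 0) for w in text.lower().split()]

# 2. Embeddings: look up a vector per token ID (random here, learned in practice)
rng = random.Random(0)
d_model = 8
embedding_table = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in vocab]
vectors = [embedding_table[i] for i in ids]

# 3. Self-attention: mix each token's vector with context from earlier tokens
contextual, attn_weights = causal_self_attention(vectors)

# 4. Next-token prediction: score the last position against every vocabulary entry
logits = [dot(contextual[-1], e) for e in embedding_table]
probs = softmax(logits)
next_id = max(range(len(vocab)), key=lambda i: probs[i])
print("token IDs:", ids)  # prints "token IDs: [1, 2, 3, 4, 5]"
print("most probable next token (untrained, so arbitrary):", vocab[next_id])
```

In a trained model, the embedding values and the attention projections are exactly the parameters adjusted during training, so that the highest-probability token becomes a sensible continuation.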

Reasons why SLMs are gaining importance over LLMs

• Compact and efficient: SLMs require less training data, memory, power and hardware than LLMs, which makes them cheaper to train and deploy in resource-constrained environments.

• Utility: Because they can run locally, they suit edge devices, mobile applications and offline AI use cases.

• Greater optimisation: SLMs use the same transformer architecture as large models but are optimised for efficiency and task-specific precision.

• Domain speciality: SLMs can be easily integrated into specialised workflows such as healthcare, finance and customer support, where broad general knowledge isn’t necessary.
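The memory advantage can be seen with simple arithmetic: storing a model's weights takes roughly parameter count × bytes per parameter. The sketch below assumes common numeric precisions (4 bytes for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit integers, 0.5 for 4-bit quantisation) and counts weights only, ignoring activations and other runtime memory. The two model sizes and the helper name are illustrative assumptions.

```python
# Bytes needed to store one parameter at common precisions (assumed values)
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative sizes: a 3-billion-parameter SLM vs a 300-billion-parameter LLM
for name, n_params in [("SLM (3B)", 3e9), ("LLM (300B)", 300e9)]:
    row = ", ".join(f"{p}: {weight_memory_gb(n_params, p):,.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name:<11} {row}")
```

At 16-bit precision the 3B model needs about 6 GB for its weights, small enough for a single consumer GPU or a laptop, while the 300B model needs about 600 GB and must be spread across several data-centre accelerators. Quantising to 4 bits shrinks the smaller model further, to about 1.5 GB.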

India’s initiatives to boost AI

• National Strategy for Artificial Intelligence (#AIForAll): The foundational national strategy released by NITI Aayog in 2018, which emphasises AI for social good, not just AI for technological leadership.

• IndiaAI Mission: A flagship national programme central to India’s AI roadmap, approved by the Union Cabinet on 7 March 2024 with an outlay of over ₹10,370 crore over five years.

- Its core components include Compute Infrastructure, a Datasets Platform, Innovation Centre & Startups, FutureSkills and AI literacy, and Safe & Trusted AI.

- India AI Impact Summit 2026: A flagship global gathering under the IndiaAI Mission that aims to showcase AI solutions, bring together industry, academia, government and civil society, and publish knowledge artefacts and casebooks on real-world AI applications.

• India AI Governance Guidelines: Issued on 5 November 2025 to promote safe, inclusive and responsible AI.